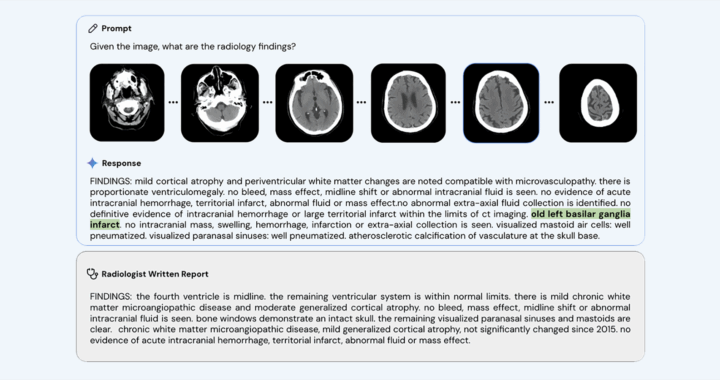

Med-Gemini, Google's healthcare AI model, came under scrutiny after it produced an incorrect anatomical term, “basilar ganglia,” in a radiology example published in May 2024. The phrase conflates two distinct brain structures, the basal ganglia and the basilar artery, into a term for something that does not exist. Experts say an error of this kind could have significant clinical consequences.

Neurologist Bryan Moore was the first to identify the error and reported it to Google. The company quietly amended its blog post, replacing “basilar” with “basal,” but left the original research paper unchanged. Google described the issue as a transcription error learned from training data, a characterization that several medical and artificial intelligence experts disputed.

Clinicians stress that the confusion is far from trivial. The basal ganglia are deep brain structures critical for movement and behavior, while the basilar artery supplies blood to the brainstem. Mistaking one for the other could lead to misdiagnosis and potentially harmful treatment decisions in high-stakes neurological and cerebrovascular cases.

Experts argue that the incident illustrates a common AI failure known as hallucination, in which a system generates plausible but false information. Google maintains it was a typographical issue, but critics contend that the confident use of an invented term demonstrates the inherent risks of deploying large language models in sensitive clinical environments.

Additional tests of Google's radiology models, including MedGemma, raised further concerns by revealing outputs that varied with prompt phrasing. In one example, rewording a request caused the system to describe a clear abnormality as normal, showing how subtle prompt variations can alter diagnostic accuracy.

Medical leaders have voiced strong warnings over such vulnerabilities. Maulin Shah of Providence called the incident “super dangerous,” while Judy Gichoya of Emory University emphasized that the AI does not admit uncertainty. Experts at Stanford and Duke note that subtle errors can quickly propagate through medical records and influence downstream clinical decisions.

Researchers warn of two major hazards: automation bias, in which clinicians come to over-rely on the technology after repeated correct outputs, and error propagation, in which incorrect information becomes embedded in patient records and later guides decisions. Together, these risks mean a single mistake can cause harm well beyond its initial occurrence.

Critics caution that human oversight alone is insufficient to prevent such problems. In busy clinical environments, healthcare workers may overlook errors, particularly when the system has a high rate of accuracy in other cases. Experts insist that system-level safeguards and clear correction protocols are essential before integrating AI deeply into medical workflows.

Proposed solutions include holding healthcare AI to a stricter safety standard than consumer systems, using it solely as a supportive tool, implementing hallucination-detection mechanisms, and requiring explicit uncertainty markers in responses. Experts also call for rigorous pre-deployment trials, independent evaluations, and transparent public corrections.
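As a rough illustration of what a hallucination-detection mechanism with an explicit uncertainty marker could look like, the Python sketch below checks anatomical phrases in a generated report against a list of known terms and appends a review note when one is not recognized. It is not how Google or any vendor implements such checks. The vocabulary, the `flag_unknown_terms` and `annotate` functions, and the marker format are all hypothetical and exist only for this example.

```python
import re

# Hypothetical, tiny vocabulary for illustration only. A real system would
# rely on a maintained clinical terminology, not a hard-coded set.
KNOWN_TERMS = {"basal ganglia", "basilar artery"}

# Nouns that mark an anatomical phrase in this toy example (an assumption).
HEAD_NOUNS = ("ganglia", "artery")


def flag_unknown_terms(report: str) -> list[str]:
    """Return '<modifier> <noun>' phrases that are not in KNOWN_TERMS."""
    pattern = r"\b([a-z]+)\s+(" + "|".join(HEAD_NOUNS) + r")\b"
    flagged = []
    for modifier, noun in re.findall(pattern, report.lower()):
        phrase = f"{modifier} {noun}"
        # This naive rule would also flag phrases such as "the artery";
        # it is only meant to show the shape of the check.
        if phrase not in KNOWN_TERMS and phrase not in flagged:
            flagged.append(phrase)
    return flagged


def annotate(report: str) -> str:
    """Append an explicit uncertainty marker when unknown terms are found."""
    flagged = flag_unknown_terms(report)
    if not flagged:
        return report
    note = "; ".join(flagged)
    return f"{report}\n[UNVERIFIED TERMS, needs clinician review: {note}]"


if __name__ == "__main__":
    print(annotate("Old infarct in the left basilar ganglia."))
```

A check like this can only catch terms that are missing from its vocabulary. It would not catch a real term used in the wrong place, which is one reason experts also call for independent evaluation and human review.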